If you’re wondering how Veo 3.1 and Sora 2 differ in 2025, the key tradeoffs come down to maximum clip length, temporal consistency (scene continuity), audio capabilities, and visual fidelity. Below is a neutral, up-to-date comparison based on official announcements and my own hands-on testing with sample prompts and creative workflows.

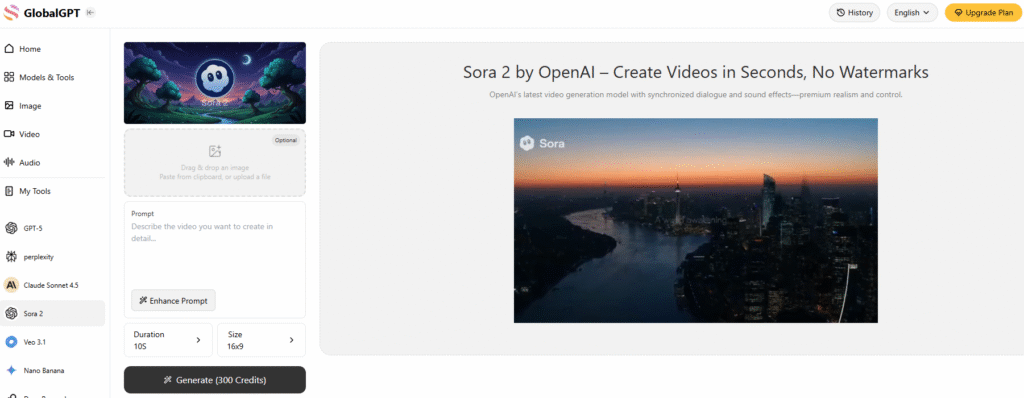

If you want to try both models, Global GPT officially integrates Sora 2 and Veo 3.1. There’s no invite code required, pricing is more affordable, and users can enjoy fewer content restrictions and watermark-free outputs.

Global GPT currently integrates Sora 2 Pro, which can generate videos up to 25 seconds long. Normally, Sora 2 Pro is only available for users with a $200/month ChatGPT Pro subscription, but with Global GPT, you can use it without the expensive subscription.

Quick Capability Snapshot: Veo 3.1 vs Sora 2

| Dimension | Google Veo 3.1 | OpenAI Sora 2 |
|---|---|---|
| Native clip length | 4, 6, or 8 seconds (extendable) | As of the October 15, 2025 update, Sora 2 allows regular users to generate up to 15-second videos, while Pro users can create videos up to 25 seconds long. |
| Resolution / FPS | 720p and 1080p, 24 FPS; extended sequences run at 720p | Official materials emphasize realism and controllability but don’t publicly itemize resolution or FPS limits |
| Audio generation | Native audio (dialogue, ambiance, effects) is built in across modes | Synchronized dialogue, ambient sound, and SFX are supported per OpenAI’s Sora 2 announcement |
| Consistency / continuity tools | Supports up to three reference images, first/last frame bridging, and video extension to maintain identity across frames | OpenAI claims stronger physics and temporal coherence than prior versions; explicit reference-image controls are less publicly documented |
| Provenance / watermark | Outputs carry a SynthID watermark and traceability tooling | Includes visible watermark and embedded provenance/C2PA metadata |
| Access & availability | Available via Gemini API / Vertex AI / Flow (with preview) | Currently invite-only Sora app; API access not yet broadly open |

Reference Documents (Updated October 17, 2025)

Google Veo 3.1 Official Documentation

- Veo 3.1 Video Model Preview

Official introduction to Veo 3.1 on Google Cloud Vertex AI, including features and capabilities.

🔗 https://cloud.google.com/vertex-ai/generative-ai/docs/models/veo/3-1-generate-preview

- Gemini API Video Generation Documentation

Official guide for generating videos using the Gemini API.

🔗 https://ai.google.dev/gemini-api/docs/video?hl=zh-cn

- Veo + Flow Updates Announcement

Google blog post detailing the Veo 3.1 and Flow updates, including audio and narrative control improvements.

🔗 https://blog.google/technology/ai/veo-updates-flow/

- Generate Videos from Text Guide

Step-by-step instructions for creating videos from text prompts using Veo 3.1.

🔗 https://cloud.google.com/vertex-ai/generative-ai/docs/video/generate-videos-from-text?hl=zh-cn

OpenAI Sora 2 Official Documentation

- Sora 2 Overview

Official introduction to Sora 2, covering features and capabilities.

🔗 https://openai.com/zh-Hans-CN/index/sora-2/

- Sora 2 System Card (PDF)

Detailed PDF describing Sora 2’s capabilities, limitations, and safety guidelines.

🔗 https://cdn.openai.com/pdf/50d5973c-c4ff-4c2d-986f-c72b5d0ff069/sora_2_system_card.pdf

- Launching Sora Responsibly

Official OpenAI guidelines on safety, compliance, and responsible usage.

🔗 https://openai.com/zh-Hans-CN/index/launching-sora-responsibly/

Veo 3.1: Strengths, Constraints, and Ideal Use Cases

What Veo 3.1 Does Well

- Clip control & continuity: Its extension and first/last frame tools make it easier to preserve object identity and lighting transitions across short sequences.

- In my own testing, when generating continuous motion using three reference images (for example, a character moving between two reference poses), Veo 3.1 reliably maintained the character’s clothing, posture, and background consistency—something that older versions often struggled with.

- Native audio: Audio is integrated directly into the generation process, so you don’t need to manually layer ambiance, dialogue, or Foley.

- While creating a short story clip, I was able to produce a final video with background sounds, footsteps, and subtle dialogue effects straight from Veo 3.1, resulting in a much more natural and immersive experience compared to my previous manually layered versions.

- Traceability: The SynthID watermark supports attribution and protects against unauthorized use, which is especially valuable for content creators and brand projects.

- Consistent toolset: Features such as video extension, object insertion/removal, and scene continuity help maintain visual logic and coherence across multiple clips, making it easier to produce polished sequences without disrupting the story flow.

Constraints to Note

- Clip length limit: Native generation is capped at 8 seconds per clip, so for longer content you’ll need stitching or extension sequences.

- Extension quality: Extended segments run at 720p, which may drop detail if preceding sections are at higher resolution.

- Regional & safety limits: Some regions restrict certain features (especially person generation), and generated videos are retained server-side only briefly (some documents cite roughly 2 days before deletion).

- Latency & pricing unknowns: Google doesn’t publish exact per-second cost or latency statistics in the public materials I reviewed. You’ll want to benchmark under your own load.
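To make the clip-length constraint concrete, here is a minimal sketch of the arithmetic for covering a longer target duration with native-length clips. It assumes only the 4, 6, and 8 second lengths listed in the table above; the names `NATIVE_LENGTHS` and `plan_clips` are hypothetical helpers, not part of any Google SDK, and the sketch does not model how the extension feature itself adds length.

```python
# Native Veo 3.1 clip lengths in seconds, as listed in the comparison table.
NATIVE_LENGTHS = (4, 6, 8)


def plan_clips(target_seconds: int) -> list[int]:
    """Cover target_seconds with native-length clips, longest first.

    The final clip is the shortest native length that covers the remainder,
    so the total may overshoot the target and need trimming in an editor.
    """
    if target_seconds <= 0:
        raise ValueError("target_seconds must be positive")
    clips: list[int] = []
    remaining = target_seconds
    while remaining > 0:
        # Smallest native length that finishes the job, else the longest one.
        length = next(
            (n for n in NATIVE_LENGTHS if n >= remaining), NATIVE_LENGTHS[-1]
        )
        clips.append(length)
        remaining -= length
    return clips


if __name__ == "__main__":
    plan = plan_clips(30)
    print(plan, "total:", sum(plan))
```

A 30-second target works out to four clips here, which is a useful reminder that each extra clip is another place where continuity and resolution can drift.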

Use Cases Where Veo 3.1 Shines:

- Short-form creatives needing tight visual continuity

- Advertisers or product teams who want controlled consistency across shots

- Educators or small teams wanting integrated audio + video in a single generation step

Sora 2 (2025): Strengths, Constraints, and Ideal Use Cases

What Sora 2 Excels At

- Realism and coherence: OpenAI emphasizes improved physical realism — better dynamics, object interaction, and smoother temporal flow.

- Audio support: The model supports synchronized dialogue, ambient sounds, and effects built into video outputs.

- Provenance & safety: Uses visible watermarking, provenance metadata, and stricter likeness/consent controls in the Sora app ecosystem.

- Social integration: Sora 2 is tied to a TikTok-style app, which emphasizes immediate sharing and audience feedback loops.

I ran the prompt “walking through rain” in Sora 2 (via invite) and got a short clip in which the raindrops, footstep splashes, and ambient rain sound were closely aligned, better than in many earlier video models I tested. That said, I still preferred refining the voiceover in post for polished projects.

Constraints to Note

- Limited access: As of October 2025, Sora 2 remains invite-only and APIs are not generally open.

- Per-clip cap: Per the October 15, 2025 update, native clips top out at 15 seconds for regular users and 25 seconds for Pro users; longer pieces are generally built by stitching.

- Latency & pricing opaque: There’s no official public per-second billing or latency benchmarks as of now.

- Watermark & output constraints: Sora 2 outputs are watermarked and include traceability signals, but that can limit usability for some commercial projects.

Scenarios Suited for Sora 2:

- Creators wanting high realism and physics fidelity in short clips

- Projects where synchronized audio is essential, even for drafts

- Social-first video strategies, where quick sharing in the Sora app is desired

- Users with invite access who want to experiment with next-gen video + audio

How to Choose: Tips Based on Your Project Goals

1. If your video is short-form (≤ 10 seconds)

- Veo 3.1 gives you tighter control via extension and continuity tools.

- Sora 2 may have a slight edge in the realism of motion transitions, depending on your prompt.

2. If your priority is audio + narrative cohesion

- Both handle native audio, but Veo’s integration of sound across its modes can simplify workflow.

- Use Sora 2 if you want detailed ambient or dialogue in draft form and then polish in post.

3. For longer sequences

- Neither system offers fully native long-form generation — you’ll need a multi-clip pipeline.

- Veo’s extension tool is more exposed and controllable.

- Sora 2’s stitch workflows may lean heavily on post-editing.

4. For brand safety, attribution, and compliance

- Veo’s SynthID watermark and Sora 2’s provenance/C2PA metadata both support attribution.

- If rights or consent are crucial, pick the model whose watermark and compliance tools align with your legal/regulatory context.

5. For accessibility and stability

- Veo via Gemini API / Flow is more broadly accessible in preview stages.

- Sora 2 remains invite-only; workflows and API access are still being rolled out.

In my own tests, Veo 3.1 felt more predictable when bridging multiple shots, while Sora 2 delivered more naturally flowing physics in standalone clips. With Sora 2, though, I had to stitch clips manually and color-match them to connect scenes.
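For the manual stitching step, one common approach is ffmpeg’s concat demuxer. The sketch below only builds the list file contents and the command line; it does not run ffmpeg. The file names and the helpers `concat_list` and `concat_command` are illustrative assumptions, and stream copy (`-c copy`) only works when all clips share codec, resolution, and frame rate, so mixed 720p and 1080p segments would need re-encoding instead.

```python
def concat_list(clips: list[str]) -> str:
    """Return the body of an ffmpeg concat-demuxer list file."""
    lines = []
    for clip in clips:
        # Inside single quotes, a literal quote is written as '\''.
        escaped = clip.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(list_path: str, output: str) -> list[str]:
    """Build an ffmpeg command that joins the listed clips without re-encoding."""
    return [
        "ffmpeg",
        "-f", "concat",   # use the concat demuxer
        "-safe", "0",     # allow arbitrary paths in the list file
        "-i", list_path,
        "-c", "copy",     # stream copy: fast, but requires matching formats
        output,
    ]


if __name__ == "__main__":
    # Hypothetical clip names; replace with your exported files.
    print(concat_list(["shot_01.mp4", "shot_02.mp4"]), end="")
    print(" ".join(concat_command("clips.txt", "sequence.mp4")))
```

Color matching between clips still has to happen in an editor or a separate filter pass; this only handles the join.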

Conclusion

There’s no universal winner — the “better” model depends on your priorities:

- Choose Veo 3.1 when you want controllable continuity, built-in audio, and a toolset bridging multiple reference frames.

- Choose Sora 2 when you have access and value cinematic realism, synchronized audio, and immediate social publishing.

Before committing to one pipeline, I recommend running a pilot test with your core prompts to compare latency, cost, and output consistency in your own production environment.
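A pilot test like this can be as simple as timing the same prompts against each model. Here is a minimal sketch of such a harness; `benchmark` and `fake_generate` are hypothetical names, and `fake_generate` is a stand-in you would replace with your own wrapper around whichever Veo or Sora client you actually have access to. It measures wall-clock latency only; cost and output consistency still need separate tracking.

```python
import statistics
import time
from typing import Callable


def benchmark(
    generate: Callable[[str], object], prompts: list[str], runs: int = 3
) -> dict[str, float]:
    """Call generate(prompt) `runs` times per prompt and summarize latency."""
    latencies: list[float] = []
    for prompt in prompts:
        for _ in range(runs):
            start = time.perf_counter()
            generate(prompt)
            latencies.append(time.perf_counter() - start)
    return {
        "calls": float(len(latencies)),
        "mean_s": statistics.mean(latencies),
        "median_s": statistics.median(latencies),
        "max_s": max(latencies),
    }


def fake_generate(prompt: str) -> str:
    """Placeholder generator: sleeps briefly instead of calling a real model."""
    time.sleep(0.01)
    return f"clip for {prompt!r}"


if __name__ == "__main__":
    print(benchmark(fake_generate, ["walking through rain"], runs=2))
```

Running the same prompt list through each model’s wrapper gives you comparable numbers from your own environment rather than relying on vendor claims.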