If your AI-generated images often look unrealistic or inconsistent, you’re not alone. Improving AI image generation accuracy is all about controlling how the model interprets your input — through precise prompts, reference images, and the right generation settings.

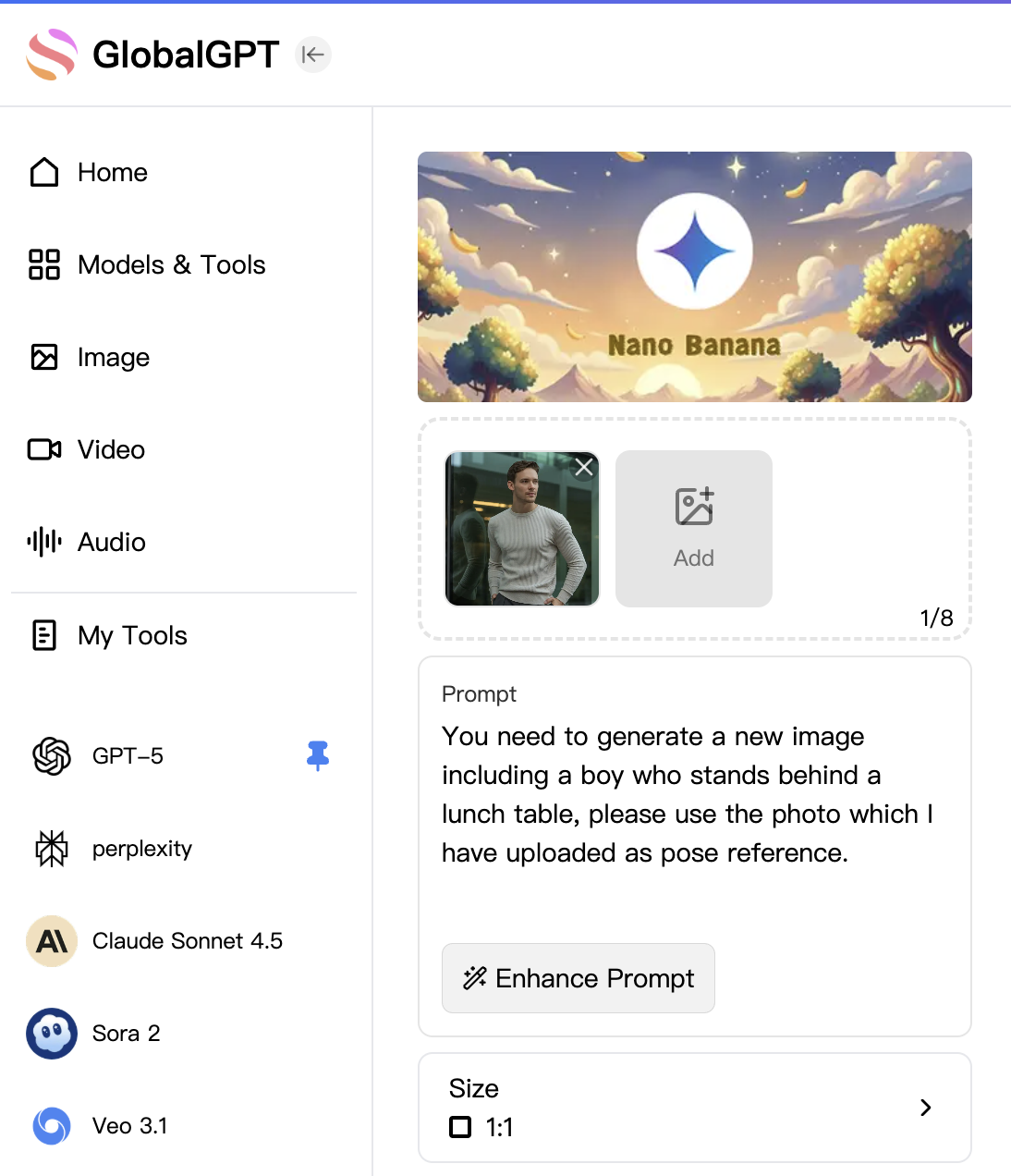

Whether you use Midjourney, Flux, or Nanobana, these proven strategies will help you produce more lifelike and coherent images every time.

By the way, the AI image tools on Global GPT have a built-in prompt optimizer that makes it easy to create higher-quality images.

Understand What “Accuracy” Means in AI Image Generation

Before improving accuracy, you must define it. In AI image generation, accuracy refers to how well the output aligns with your intended subject, composition, and style. It includes:

- Visual precision: Are details sharp and coherent?

- Prompt fidelity: Does the image match your written description?

- Style consistency: Does it maintain the right artistic tone or realism?

Understanding these aspects helps you diagnose whether your issue is with prompt design, model capability, or rendering quality.

Craft Highly Specific Prompts (Avoid Ambiguity)

A vague prompt leads to vague results. To improve image fidelity, describe every essential detail: subject, action, environment, camera angle, and lighting.

Example:

❌ “A woman in a park”

✅ “A young woman jogging in a sunny city park, soft morning light, cinematic composition, 35mm lens, photorealistic style”

The more structured and context-rich your prompt, the higher the semantic accuracy of the generated image.
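One way to keep prompts structured is to fill in named fields and join them, so that a missing detail is easy to spot. The sketch below is only an illustration: `PromptSpec`, `build_prompt`, and `missing_fields` are hypothetical names, not part of any image tool, and the field list simply mirrors the details mentioned in this section.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt details discussed in this section."""
    subject: str
    action: str = ""
    environment: str = ""
    lighting: str = ""
    camera: str = ""
    style: str = ""


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty fields into one comma-separated prompt string."""
    # Subject and action read best as a single phrase ("A young woman jogging").
    subject_phrase = " ".join(p for p in (spec.subject, spec.action) if p)
    parts = [subject_phrase, spec.environment, spec.lighting, spec.camera, spec.style]
    return ", ".join(p.strip() for p in parts if p and p.strip())


def missing_fields(spec: PromptSpec) -> list:
    """Return the names of fields left empty, as a quick ambiguity check."""
    return [name for name, value in vars(spec).items() if not value.strip()]


vague = PromptSpec(subject="A woman in a park")
detailed = PromptSpec(
    subject="A young woman",
    action="jogging",
    environment="in a sunny city park",
    lighting="soft morning light",
    camera="35mm lens",
    style="photorealistic style",
)

print(missing_fields(vague))   # lists every detail the vague prompt leaves open
print(build_prompt(detailed))
```

A check like `missing_fields` does not make a prompt good on its own, but it shows at a glance which details are still left for the model to guess.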

Use Reference Images for Higher Visual Precision

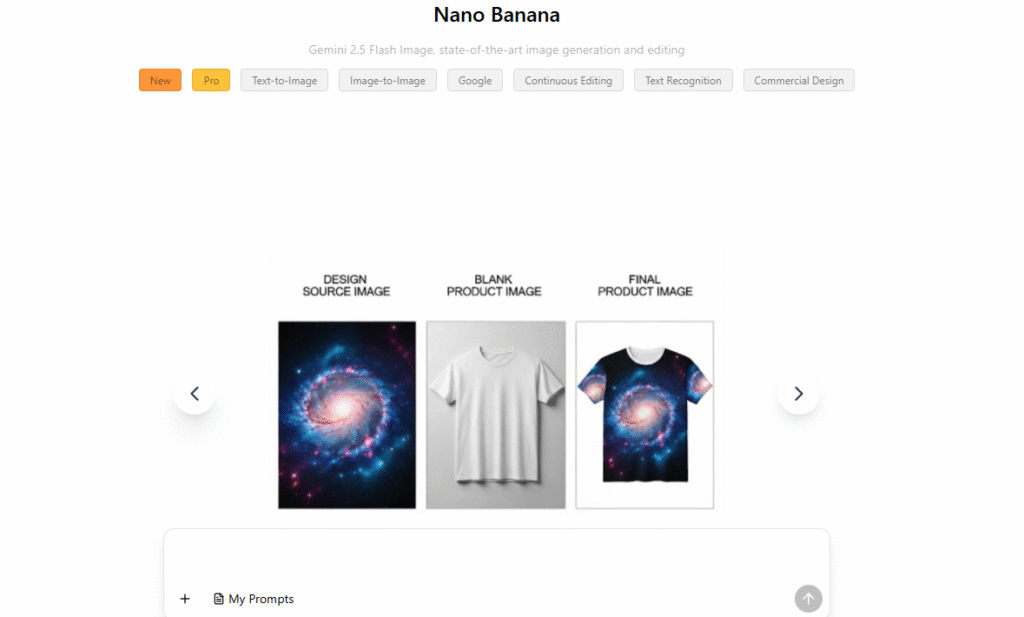

Modern generators like Nanobana, Midjourney, and PerplexityArt Mode allow image-to-image prompting, which can improve accuracy considerably because the model starts from a concrete visual instead of text alone. Upload a reference photo that demonstrates your desired pose, lighting, or facial expression.

This technique:

- Guides the model’s composition

- Maintains subject likeness

- Reduces unwanted randomness

📌 Tip: Combine text + image prompts for best results (e.g., “Use this photo as pose reference, but render in fantasy armor style”).

Choose the Right Model and Style Preset

Different AI models specialize in different strengths:

- DALL·E 3: Great for conceptual accuracy and layout control

- Stable Diffusion XL: Best for photorealism and detail control

- Midjourney v6: Ideal for stylized, cinematic results

- Global GPT Image Generator: Integrated multimodal system with model blending for better realism

Choosing the right engine for your purpose — artistic illustration vs. real photo — directly impacts accuracy.

Adjust CFG and Sampling Parameters

In diffusion-based tools like Stable Diffusion, parameters such as CFG Scale and Sampling Steps influence how strictly the model follows your prompt.

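- Higher CFG (8–12): More faithful to the prompt, less creative variation; pushed too high, images can look oversaturated or harsh

- Lower CFG (5–7): More artistic freedom, lower prompt accuracy

- More sampling steps: Better fine details but slower output, with diminishing returns beyond a certain point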

Experiment with these values to find your sweet spot between control and realism.
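To make the effect of CFG Scale concrete, here is a minimal sketch of the classifier-free guidance blend that diffusion samplers apply at each step: the model predicts noise once without the prompt and once with it, and the scale controls how far the result is pushed toward the prompt-conditioned prediction. The function name `apply_cfg` and the two-element lists are toy stand-ins for real tensors.

```python
def apply_cfg(uncond, cond, cfg_scale):
    """Blend unconditional and prompt-conditioned noise predictions.

    guided = uncond + cfg_scale * (cond - uncond)
    """
    if len(uncond) != len(cond):
        raise ValueError("predictions must have the same length")
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


# Toy values standing in for the model's two noise predictions.
uncond = [0.0, 1.0]
cond = [1.0, 3.0]

print(apply_cfg(uncond, cond, 1.0))   # equals the conditioned prediction
print(apply_cfg(uncond, cond, 7.5))   # pushed well past it, toward the prompt
```

At a scale of 1 the result is just the prompt-conditioned prediction. Larger values exaggerate the difference between the two predictions, which is why high settings follow the prompt more closely but leave less room for variation.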

Use Negative Prompts to Eliminate Artifacts

Negative prompting helps filter out unwanted elements that distort your image. For example, using Midjourney’s --no parameter at the end of the prompt:

“portrait of a man, realistic lighting --no extra limbs, blurry background”

By explicitly telling the model what not to include, you steer it away from common artifacts and toward the key visual features, improving overall output precision.
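Tools accept negative prompts in different ways: Midjourney takes a `--no` parameter at the end of the prompt, while Stable Diffusion interfaces usually expose a separate negative prompt field. The sketch below formats the same exclusion list both ways. The helper names and the dictionary layout are illustrative assumptions, not any tool's actual request format.

```python
def midjourney_prompt(prompt, exclude):
    """Append a Midjourney-style --no parameter listing unwanted items."""
    items = [e.strip() for e in exclude if e.strip()]
    return f"{prompt} --no {', '.join(items)}" if items else prompt


def diffusion_request(prompt, exclude):
    """Keep the negative prompt as its own field (hypothetical payload layout)."""
    items = [e.strip() for e in exclude if e.strip()]
    return {"prompt": prompt, "negative_prompt": ", ".join(items)}


unwanted = ["extra limbs", "blurry background"]
base = "portrait of a man, realistic lighting"

print(midjourney_prompt(base, unwanted))
print(diffusion_request(base, unwanted))
```

Keeping the exclusion list separate from the main prompt makes it easy to reuse the same set of unwanted elements across many generations.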

Enhance Image Realism with Post-Processing and Upscaling

Even with a perfect prompt, generated images often need refinement. Use AI upscalers like:

- Global GPT’s Smart Refinement Tool

These tools improve sharpness, color balance, and noise control, giving your image professional polish.

For ultra-accurate results, pair upscaling with face restoration tools like GFPGAN or CodeFormer.

Leverage Prompt Templates and Embeddings

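Advanced users can improve prompt accuracy with prompt templates or pre-trained embeddings. Templates are reusable text fragments (e.g., “masterpiece, ultra-detailed, 8k uhd, volumetric light”) that often nudge results toward a more polished, realistic look. Embeddings are small learned files that some tools, such as Stable Diffusion front ends, let you load and trigger with a keyword to reproduce a particular style or concept.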

Many creators share effective templates on communities like Reddit’s r/PromptEngineering, helping you replicate high-quality outputs without starting from scratch.

Fine-Tune or Train Custom Models for Specialized Needs

If you frequently generate similar images — for example, portraits of the same character or branded assets — consider fine-tuning a model or using LoRA (Low-Rank Adaptation) training. Benefits:

- Improved style and subject consistency

- Better understanding of domain-specific prompts

- Reduced trial-and-error in generation

Techniques like DreamBooth and LoRA, along with tools like Global GPT’s Model Studio, make this accessible even to non-coders.
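A rough way to see why LoRA training is lighter than full fine-tuning: instead of updating a whole weight matrix, LoRA learns two thin matrices whose product is added to it. The arithmetic below compares parameter counts for a single layer. The 768 by 768 layer size and the rank of 8 are assumed example values, not settings from any specific model.

```python
def full_params(d_out, d_in):
    """Parameters updated when fine-tuning one full weight matrix."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Parameters in the two low-rank factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_out + d_in)


d_out, d_in, rank = 768, 768, 8   # assumed example sizes
full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(full)          # 589824
print(lora)          # 12288
print(full / lora)   # 48.0
```

With these example numbers the low-rank update trains 48 times fewer parameters for that layer, which is part of why LoRA files are small and training fits on modest hardware.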

Keep Iterating, Comparing, and Learning

AI image generation is both art and science. The best creators continuously:

- Compare multiple generations

- Refine prompt wording

- Log parameter settings

- Study visual patterns that improve alignment

The more feedback loops you build into your workflow, the more accurate and repeatable your results become.
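Logging parameter settings can be as simple as appending one JSON record per generation to a file, then reviewing the highest-rated runs later. This is a minimal sketch using only the Python standard library. The function names, the record fields, and the 1 to 5 rating scale are assumptions chosen for illustration.

```python
import json
import time
from pathlib import Path


def log_generation(log_path, prompt, settings, rating=None):
    """Append one generation record as a JSON line and return it."""
    record = {
        "timestamp": time.time(),
        "prompt": prompt,
        "settings": settings,   # e.g. {"cfg_scale": 8, "steps": 30, "seed": 42}
        "rating": rating,       # your own score, e.g. 1 to 5, or None if unrated
    }
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def best_runs(log_path, top_n=3):
    """Return the top-rated records so their settings can be reused."""
    with Path(log_path).open(encoding="utf-8") as f:
        records = [json.loads(line) for line in f if line.strip()]
    rated = [r for r in records if r["rating"] is not None]
    return sorted(rated, key=lambda r: r["rating"], reverse=True)[:top_n]
```

For example, after calling `log_generation("runs.jsonl", prompt, {"cfg_scale": 8, "steps": 30}, rating=4)` for each attempt, `best_runs("runs.jsonl")` returns the settings worth repeating.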

Final Thoughts: Mastering Accuracy in AI Image Generation

Improving AI image generation accuracy isn’t just about technology — it’s about communication. The clearer and more structured your input, the closer the output matches your imagination.

By combining precise prompts, reference images, and model control, you can produce accurate, realistic, and visually striking AI images far more consistently.